Informatics Students Create Codebase for Video Action Model


Interactive Worlds with Memory

Google’s latest version of the Genie model, Genie 3, can convert simple text inputs into dynamic, interactive 3D worlds. Models of this kind are known as video action models. Unlike its predecessor, Genie 3 also supports real-time interaction. The environment remembers the user’s actions and remains consistent for several minutes. Maintaining this consistency is one of the biggest challenges AI models face when creating 3D worlds. Genie 3 is thus a milestone on the road toward “world models,” meaning AI systems that can represent, understand, and simulate virtual environments.

Informatics Students Publish Training Code

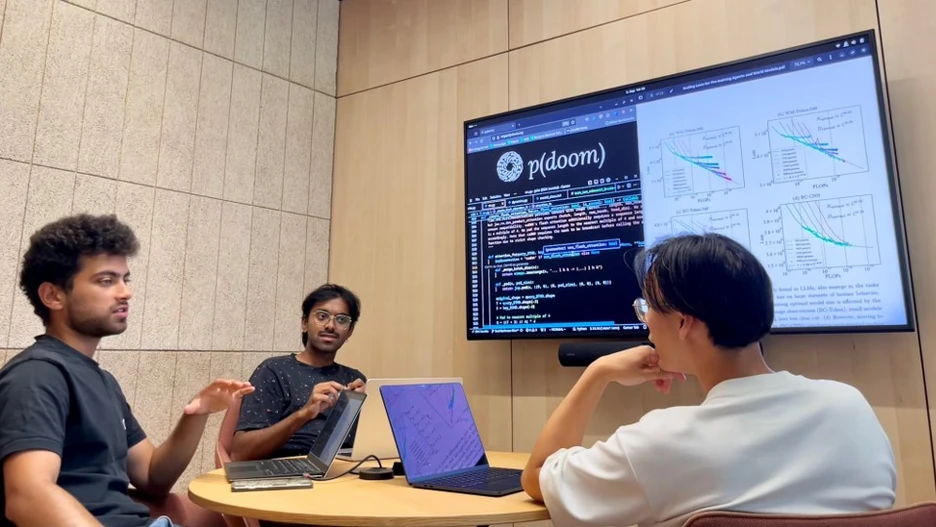

Google’s latest world model was trained using thousands of hours of gameplay videos, but Google itself does not disclose the training code for this model. Franz Srambical, Mihir Mahajan, and Alfred Nguyen, members of Professor Stefan Bauer’s Algorithmic Machine Learning and Explainable AI research group, have now succeeded in creating a scalable codebase for training models such as Genie 3. What is particularly remarkable is that the three computer scientists are not doctoral candidates, but student assistants. The complete codebase is published on GitHub and described in a blog post.

Tool for Capturing the Process of Human Software Engineering

In addition to the training code, Stefan Bauer’s team also recorded the entire software engineering process during the creation of the codebase and published it as a dataset. To do so, they used “Crowd-Code,” a tool the students also developed to record programmers’ workflows. It enables students and researchers without any AI knowledge to contribute to a dataset that will be essential for training the next generation of language models.

A Step toward General AI

In addition to applications in gaming and education, video action models can also be used to train AI systems to perform various tasks: Instead of learning and acting in the real world, AI agents or AI robots learn and act in a simulated 3D environment. This allows researchers to avoid many of the challenges of training in the real world. The latest video world models thus represent an important step toward Artificial General Intelligence (AGI), an AI system that, like humans, is capable of learning across a wide range of contexts and applying this knowledge in different situations.